In the Prosus AI team, we continuously explore how new AI capabilities can be used to help solve real problems for the 2 billion users we collectively serve across companies in the Prosus Group. The same goes for AI-based agents, the next promising frontier for GenAI. We’ve built and tested AI agents for a wide range of use cases, from conversational data analysis to educational tutors and intelligent marketplace assistants that lead the buying and selling experience for food and grocery ordering, ecommerce, and second-hand marketplaces.

We've learned that building useful agents is hard, but when they do work, they can be hugely valuable and let us reinvent the user experience. Below we share some of what we learned while building our own agents, along with an overview of the agent and tooling ecosystem (the AgentOps Landscape) that we created together with our Prosus Ventures team.

What’s in an agent?

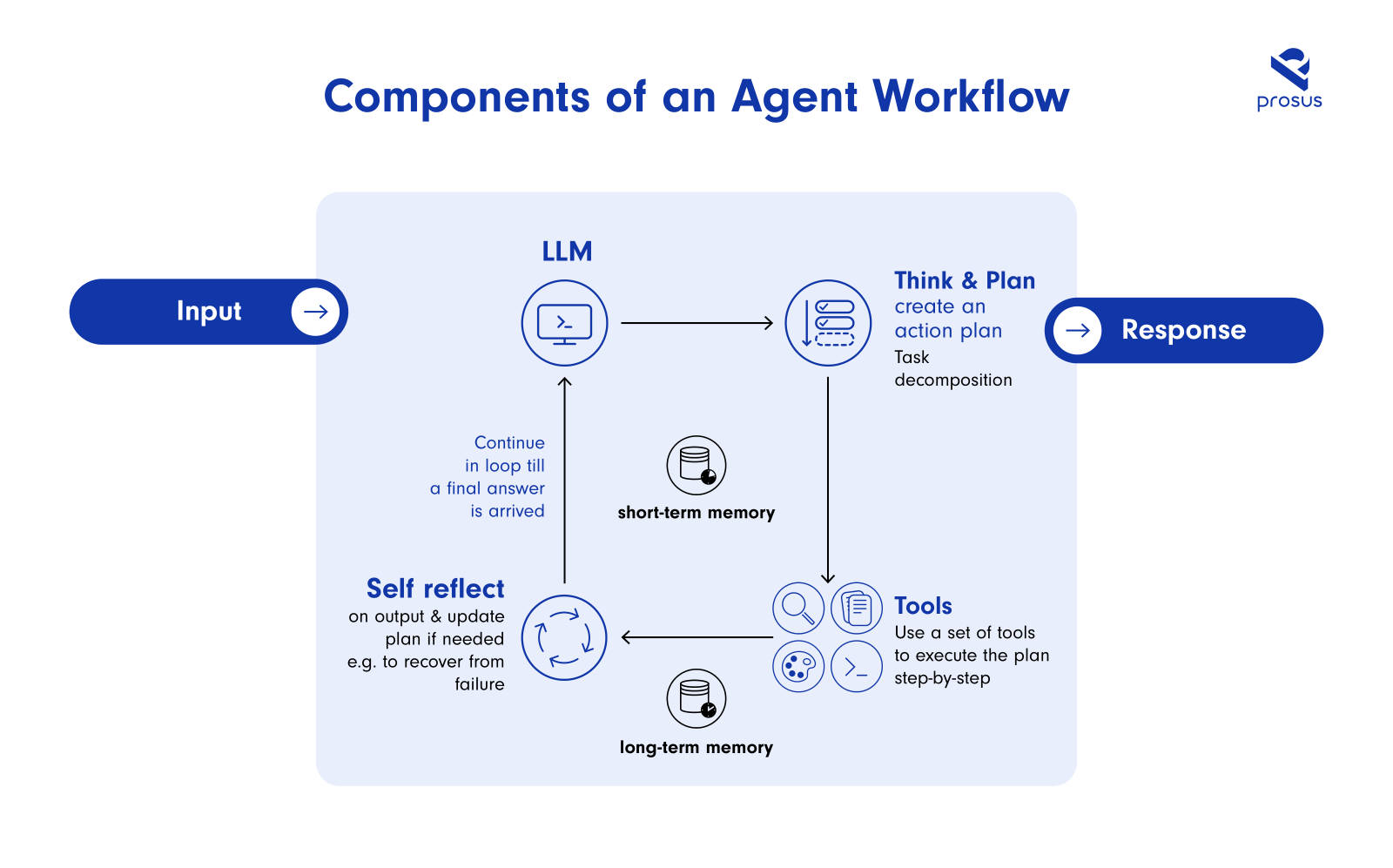

Key to any conversation about agentic systems is a common understanding of exactly what they are. Broadly, agents are AI systems that can make decisions and take actions on their own following general instructions from a user. They tend to have four main components:

- A powerful large language model (LLM) that understands the user’s intent and creates an action plan based on the objective and the tools the agent has access to.

- Tools that extend the core LLM with additional capabilities such as web search, document retrieval, code execution and database integration, and that may include other AI models. These tools enable the agent to execute actions like creating a document, running database queries or creating a chart.

- Memory that includes access to relevant knowledge such as databases (long-term memory) and the ability to retain request-specific information over the multiple steps it may take to complete the action plan (short-term memory).

- Reflection and self-critiquing: more advanced agents also have the ability to spot and correct mistakes they might make as they execute their action plan and reprioritize steps.
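The four components above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not how Toqan or any particular framework is built: `stub_planner` is a hypothetical stand-in for the LLM, the two tools are toy functions, and `reflect` is a deliberately trivial self-critique check.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Tuple

# A plan is an ordered list of (tool_name, argument) steps.
Plan = List[Tuple[str, str]]


def stub_planner(objective: str, tool_names: List[str]) -> Plan:
    """Hypothetical stand-in for the LLM: always returns a fixed two-step plan."""
    return [("search", objective), ("summarize", "{previous}")]


def search(query: str) -> str:
    """Toy tool: pretends to search the web."""
    return f"results for '{query}'"


def summarize(text: str) -> str:
    """Toy tool: pretends to summarize its input."""
    return f"summary of {text}"


@dataclass
class Agent:
    planner: Callable[[str, List[str]], Plan]          # the "LLM"
    tools: Dict[str, Callable[[str], str]]             # the tools
    long_term_memory: Dict[str, str] = field(default_factory=dict)

    @staticmethod
    def reflect(result: str) -> bool:
        """Toy self-critique: accept any non-empty result."""
        return bool(result.strip())

    def run(self, objective: str, max_retries: int = 1) -> str:
        short_term: List[str] = []  # request-specific scratchpad
        plan = self.planner(objective, list(self.tools))
        for tool_name, arg in plan:
            # Feed the previous step's output into the next step.
            previous = short_term[-1] if short_term else ""
            arg = arg.replace("{previous}", previous)
            result = ""
            for _attempt in range(max_retries + 1):
                result = self.tools[tool_name](arg)
                if self.reflect(result):  # retry only if the check fails
                    break
            short_term.append(result)
        answer = short_term[-1] if short_term else ""
        self.long_term_memory[objective] = answer  # persist across requests
        return answer


agent = Agent(planner=stub_planner, tools={"search": search, "summarize": summarize})
answer = agent.run("best running shoe")
print(answer)
```

In a real agent, the planner would be an LLM call and the reflection step would itself typically be a model-based critique rather than a non-empty check.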

Agentic systems such as our own Toqan agent are a stepping stone to more capable (autonomous) systems, and we continue to study and advocate for ways to make them useful and safe.

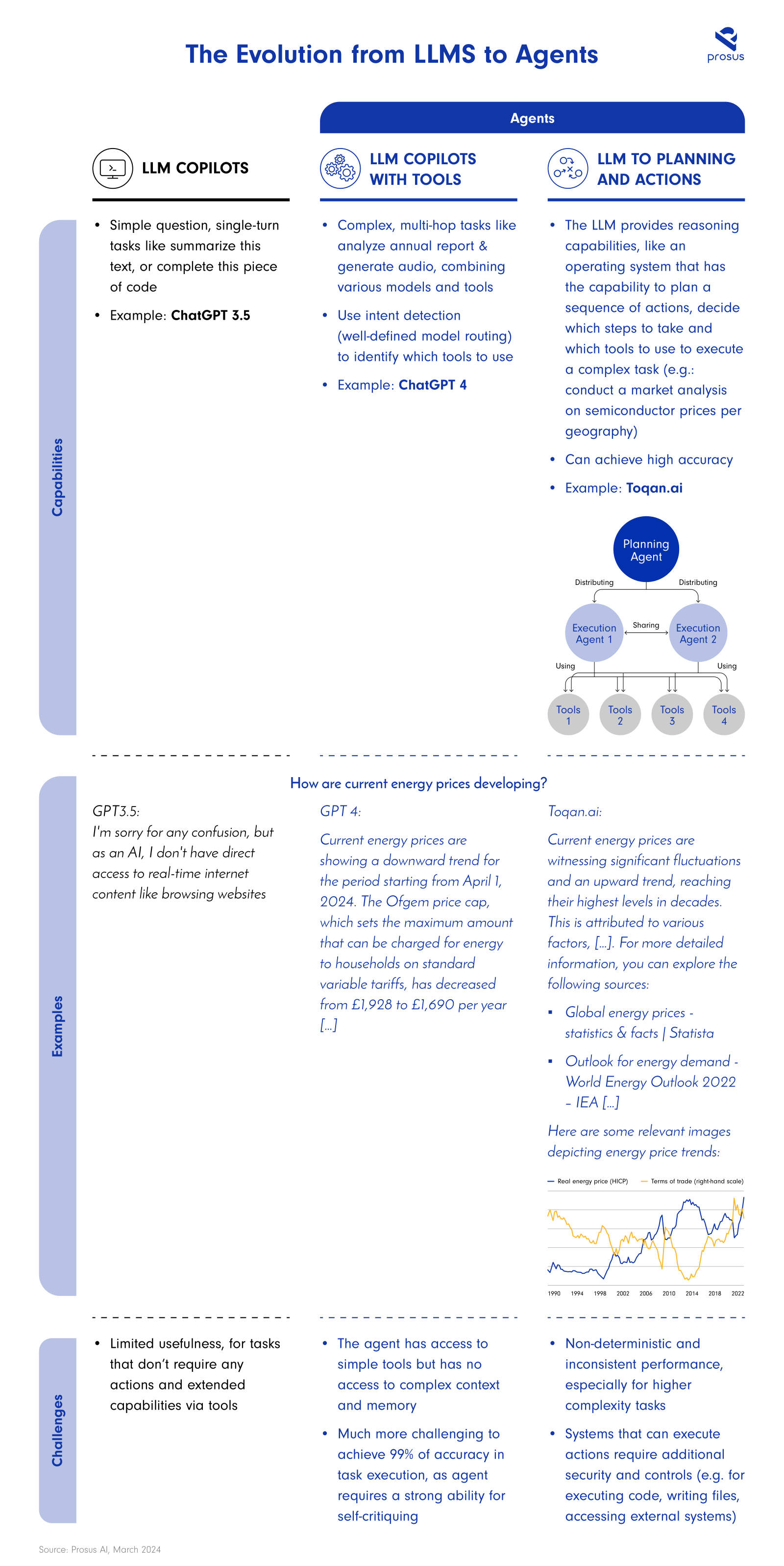

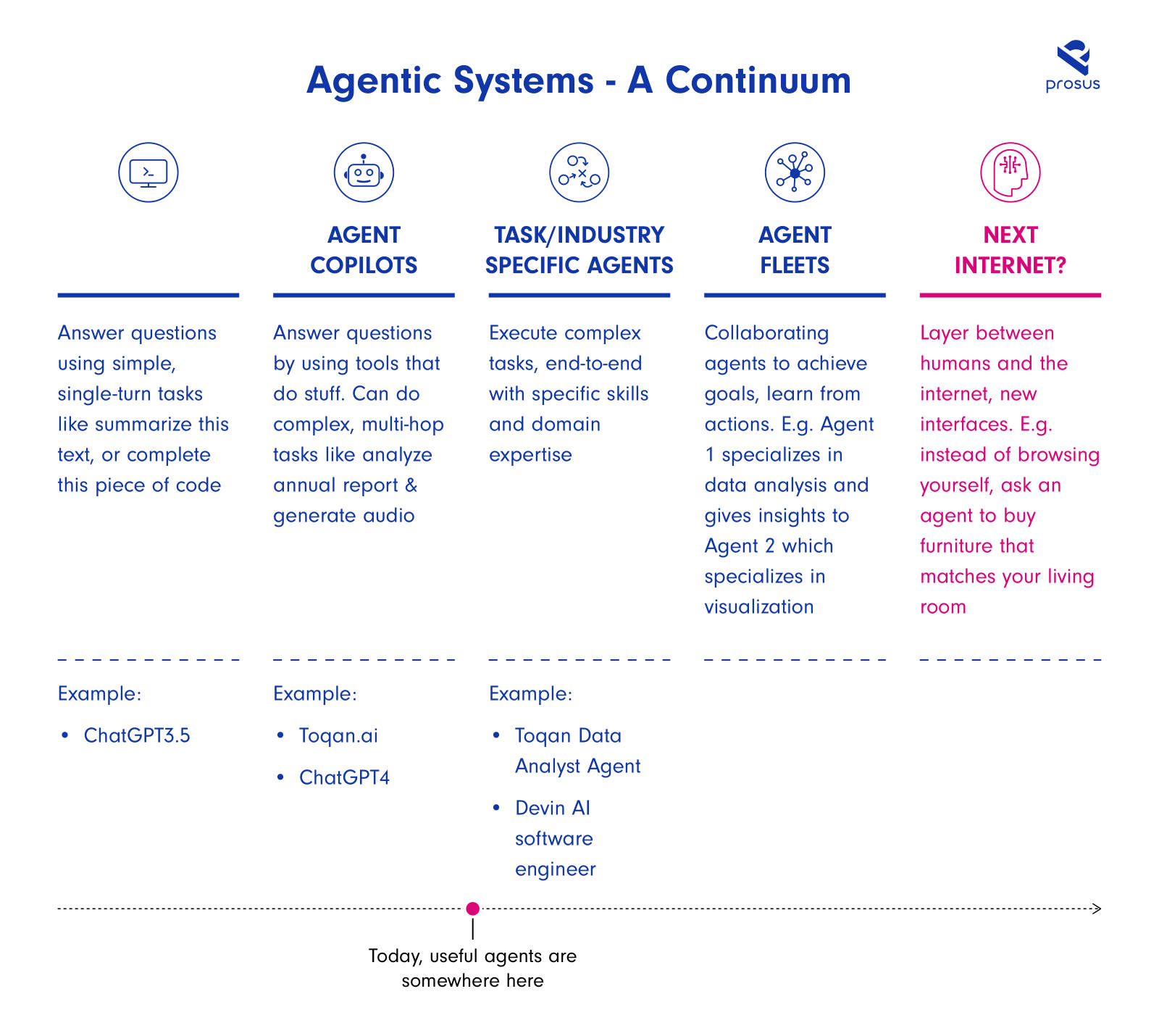

Agents come in various degrees of sophistication, depending on the quantity and quality of the tools, the LLM used, and the constraints and controls placed on the workflows the agents create. The comparison below (Evolution from LLMs to Agents) contrasts a single-turn chatbot with two agents.

Why we build agentic systems

The drive to create agentic systems is grounded in the shortcomings of existing AI co-pilots. Today, most AI co-pilots are still limited to single-turn tasks like "summarize this text" or "complete this piece of code".

Agents, on the other hand, promise to complete more complex, multi-hop tasks such as: "find me the best running shoe and buy it for me", "analyze this annual report to provide a perspective on the growth potential of this company", or "provide an overview of the market for wearables including our own internal revenue data". The difference from simple co-pilots shows in the answers each provides to the same basic request.

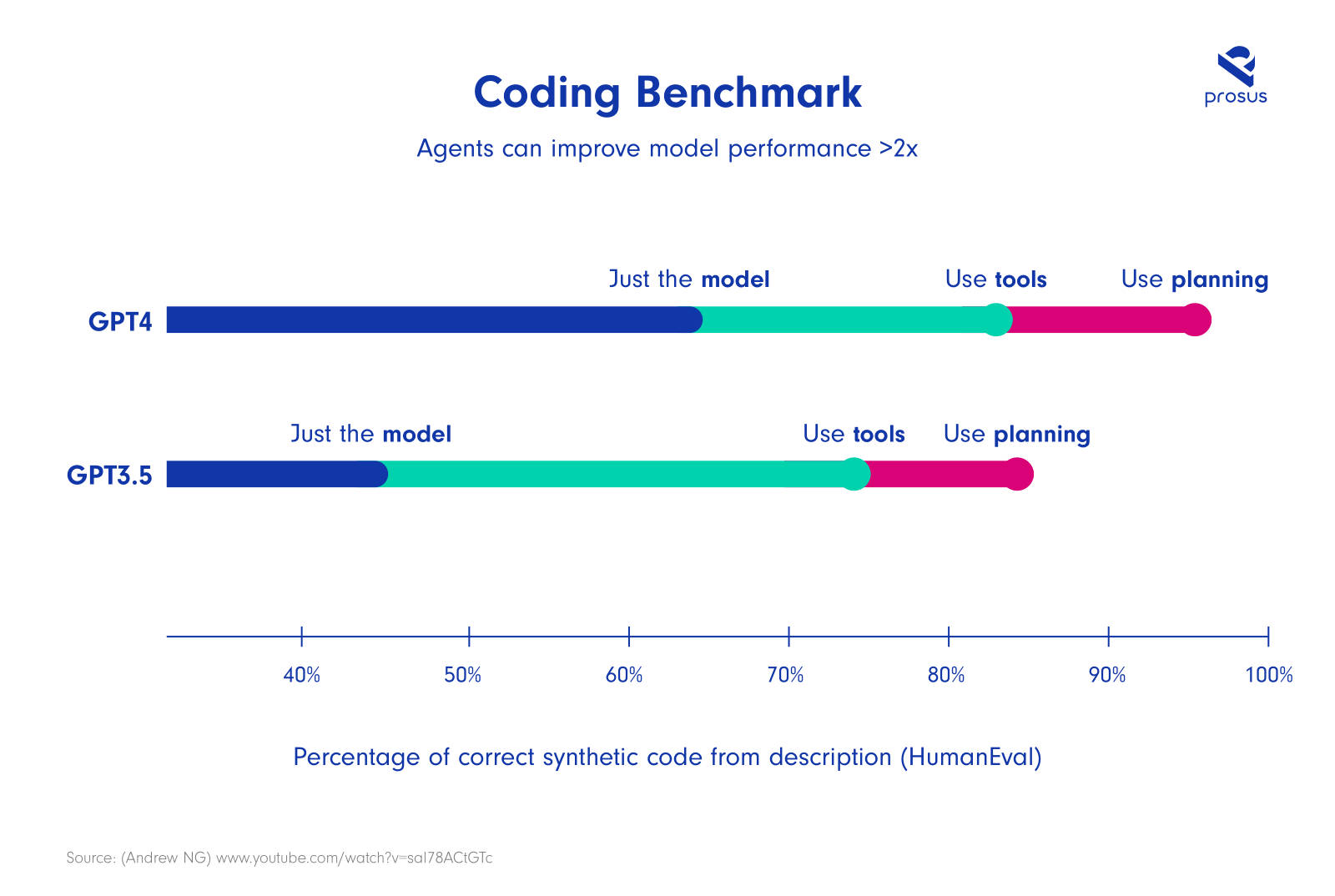

More comprehensive answers alone could justify building agents. In addition, agents can quantifiably improve the quality of results, as Andrew Ng recently described. The chart below (Coding Benchmark) shows that GPT-3.5 with agentic capabilities can comfortably beat a much larger model such as GPT-4, one of today’s most powerful models, at a complex coding task. Used on its own, without an agentic workflow, GPT-3.5 significantly underperforms its larger, more capable sibling at the same task.

Not all plain sailing

Of course, that raises the question: if agents are so great, why doesn’t all AI interaction use agents already? Despite the progress, the journey towards fully realizing the potential of LLM-based AI agents is new and filled with challenges that pose significant hurdles to building useful and reliable agentic systems. We see these challenges falling broadly into three categories: technology readiness, scalability of agentic systems, and tooling and integrations.

Opportunities with task- and industry-specific agents

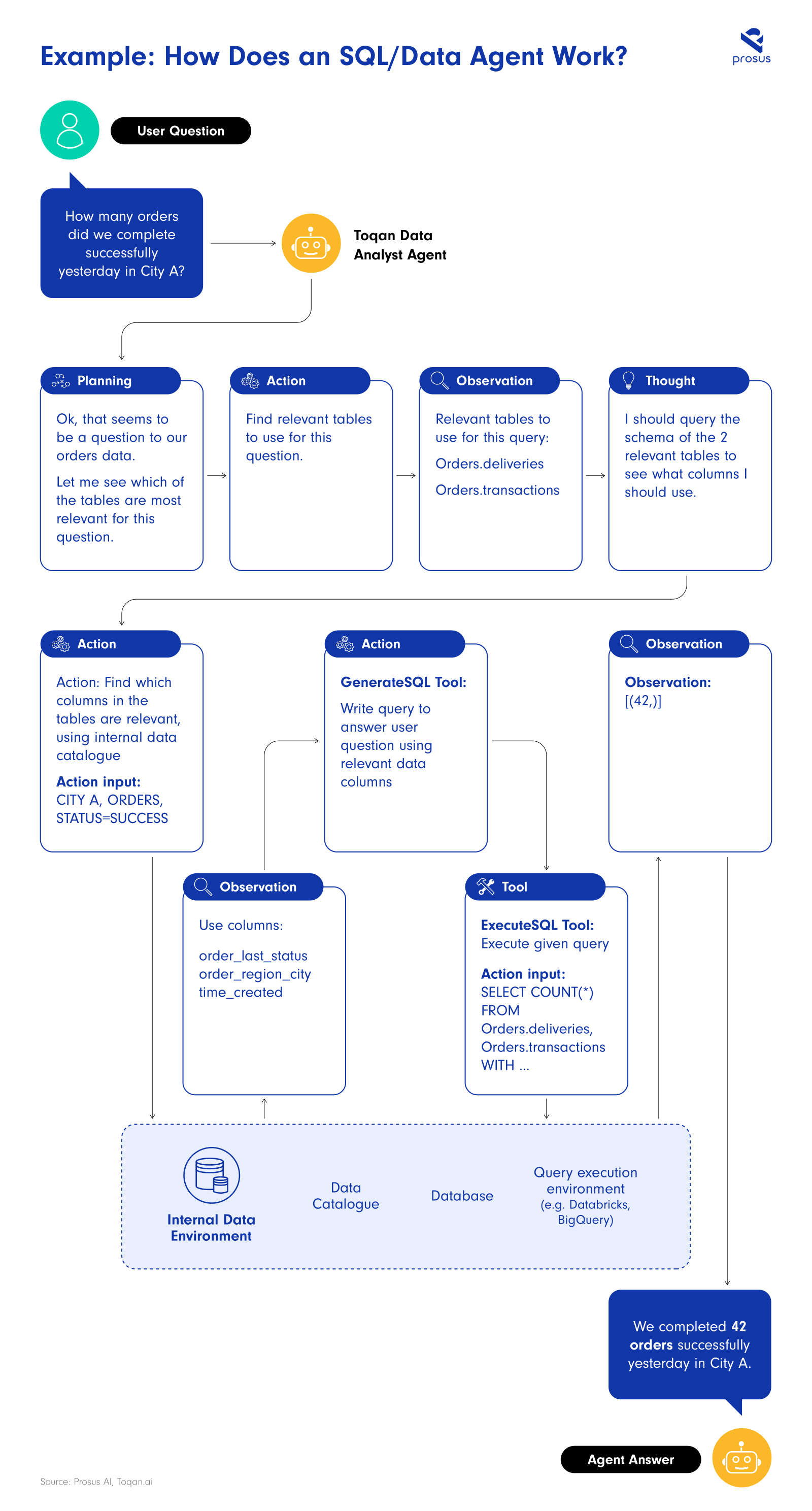

In our experience, agents usually perform better when they are built for a specific domain or a narrower set of tasks. As a result, while the landscape is still evolving, we are increasingly excited about task- and industry-specific agents, which promise tailored solutions that address specific challenges and requirements and help resolve some of the issues faced when building agents. One example is a task-specific agent we built for conversational data analysis, which gives anyone in an organization access to internal data without needing to know how to query databases.

Getting the right information at the right time to enable fact-based decision making is a complex workflow: the data often sits in internal databases, and extracting it requires data analysts who understand the data sources to write queries against the relevant databases. With task-specific agents focused on areas like data analysis, it becomes easier to search through information, access databases, evaluate the relevance of the information, and synthesize it to answer a user's question. We used that approach when building our Toqan data analyst agent below. This is how it works:

Using this workflow and tuning it over time, we now have a scalable framework that significantly improved answer accuracy, from 50% perceived accuracy to up to 100% on specific high-impact use cases. Keep an eye out for a future blog post about our technical learnings from building the Toqan Data Analyst.
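To make the idea of conversational data analysis more concrete, here is a rough sketch of the general pattern: turn a question into SQL, check the query, run it, and phrase the result. It is not the Toqan Data Analyst implementation. The `sales` table, the sample rows and `generate_sql` (a hypothetical stand-in for the LLM step, backed by a canned lookup) are all assumptions made for illustration.

```python
import sqlite3


def generate_sql(question: str) -> str:
    """Hypothetical stand-in for the LLM step that turns a question into SQL."""
    canned = {
        "total revenue by region":
            "SELECT region, SUM(revenue) FROM sales GROUP BY region ORDER BY region",
    }
    return canned[question.lower().strip("?")]


def is_read_only(sql: str) -> bool:
    """Simple guardrail: allow a single SELECT statement and nothing else."""
    stripped = sql.strip().rstrip(";")
    return stripped.lower().startswith("select") and ";" not in stripped


def answer_question(conn: sqlite3.Connection, question: str) -> str:
    sql = generate_sql(question)
    if not is_read_only(sql):
        raise ValueError("refusing to run non-SELECT SQL")
    rows = conn.execute(sql).fetchall()
    # Synthesize a short, human-readable answer from the raw rows.
    return "; ".join(f"{region}: {total:g}" for region, total in rows)


# Toy in-memory database standing in for an internal data source.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE sales (region TEXT, revenue REAL)")
conn.executemany(
    "INSERT INTO sales VALUES (?, ?)",
    [("EU", 120.0), ("EU", 80.0), ("LATAM", 50.0)],
)

result = answer_question(conn, "Total revenue by region?")
print(result)
```

The read-only check is intentionally crude. A production system would more likely rely on database permissions and a proper SQL parser than on string inspection.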

The future is… AgentOps?

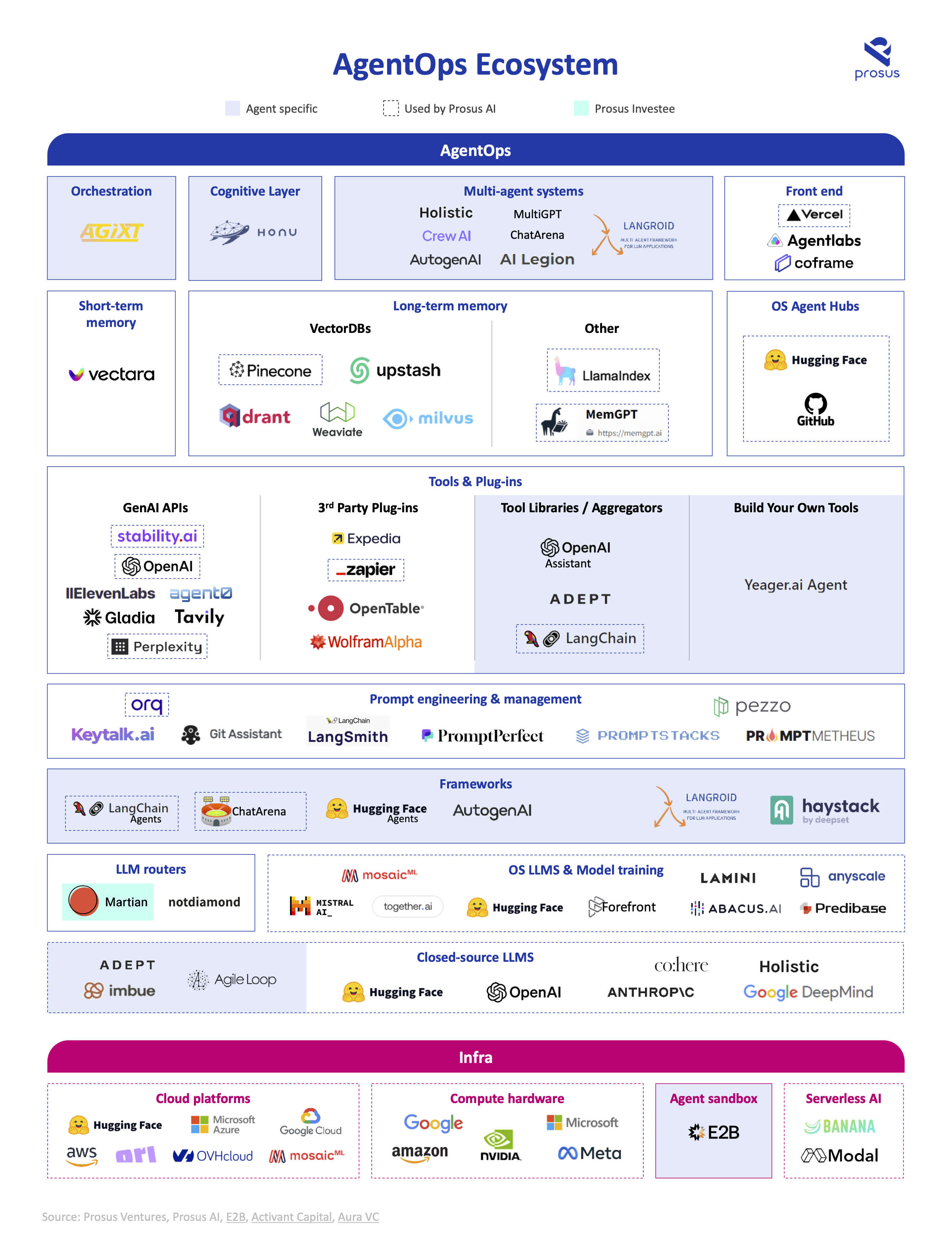

As we’ve discussed, building agent systems entails more than simply prompt engineering a powerful LLM, although agents are only possible thanks to ongoing advancements in model training, such as function calling (which makes it possible to call external tools), and to more powerful LLMs that can reason and plan. Creating an effective agent requires building tools for the agent to access (e.g. writing and executing its own code, browsing the web, reading and writing databases), building execution environments, integrating with systems, enabling a level of planning and self-reflection, and more.
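As an illustration of what function calling involves on the application side, the sketch below parses a structured tool request, validates it against a registry and runs the matching function. The JSON shape (`name` plus `arguments`), the `TOOLS` registry and the `create_chart` tool are assumptions chosen for this example and do not reproduce any specific vendor's API.

```python
import json
from typing import Any, Callable, Dict, List


def create_chart(title: str, values: List[float]) -> str:
    """Toy tool: describes the chart it would draw."""
    return f"chart '{title}' with {len(values)} points"


# Registry of callable tools with the argument types each one requires.
TOOLS: Dict[str, Dict[str, Any]] = {
    "create_chart": {
        "function": create_chart,
        "required": {"title": str, "values": list},
    },
}


def dispatch(model_output: str) -> Any:
    """Validate a JSON tool call emitted by the model and execute it."""
    call = json.loads(model_output)
    spec = TOOLS.get(call.get("name"))
    if spec is None:
        raise ValueError(f"unknown tool: {call.get('name')!r}")
    args = call.get("arguments", {})
    for param, expected_type in spec["required"].items():
        if not isinstance(args.get(param), expected_type):
            raise ValueError(f"bad or missing argument: {param}")
    # Pass only the declared parameters, ignoring anything extra.
    function: Callable[..., Any] = spec["function"]
    return function(**{param: args[param] for param in spec["required"]})


# Example of the kind of structured output a model might produce.
model_output = (
    '{"name": "create_chart", '
    '"arguments": {"title": "Revenue", "values": [1, 2, 3]}}'
)
result = dispatch(model_output)
print(result)
```

Validating the call before executing it matters because the model's output is untrusted input: it can name tools that do not exist or omit required arguments.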

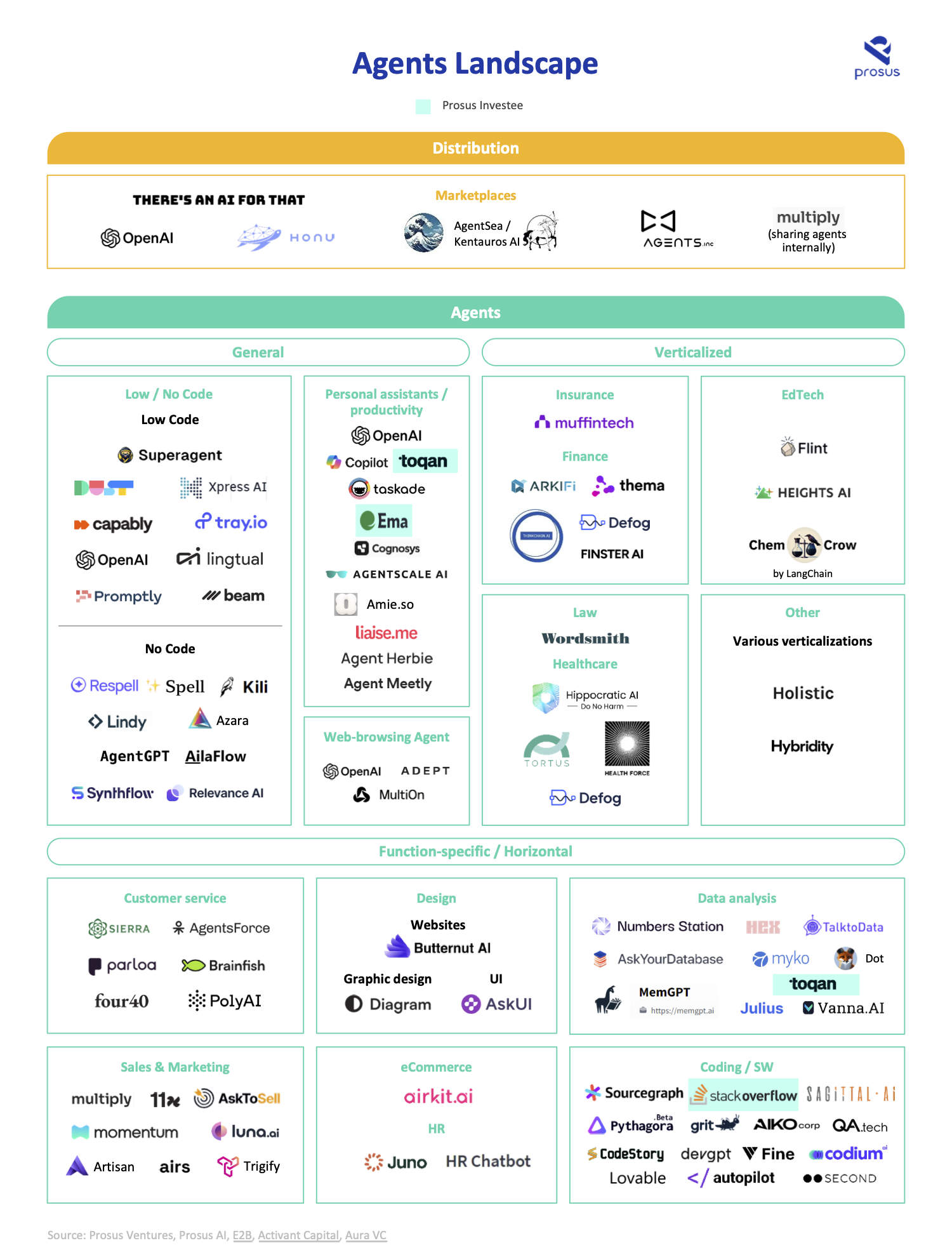

Due to the complexity of these agentic systems, the concept of AgentOps has emerged as a critical area of focus. AgentOps aims to reduce technical barriers to building and scaling AI agents by providing a set of pre-built capabilities and tools that can be pieced together to make it easier to create more sophisticated and efficient agentic systems. For anyone building agents, monitoring the AgentOps landscape will be crucial for understanding what technological advancements become available to further empower AI agents and expand their capabilities.

As we built Toqan and other agent-based systems, we frequently found ourselves solving hard technical problems and looking for tools to build with. As a result, we created the AgentOps landscape together with our Prosus Ventures team to highlight some of the tools we considered (see below). We hope this is a useful guide for others interested in following agents.

Just the beginning

While it may not feel like it to those of us building the systems, AI agents are still very much in their infancy. The journey towards building effective and ubiquitous autonomous AI agents is still one of continuous exploration and innovation. As we navigate the complexities and challenges of productizing these agents, the potential for transformative change is increasingly evident. By understanding the current landscape, categorizing agents based on their focus areas, and keeping a close eye on the development of AgentOps, we can anticipate the exciting advancements that lie ahead in the world of autonomous AI agents.

We expect that agents will appear in co-pilots and AI assistants this year and will become mainstream for experimentation and for non-mission-critical applications such as market research, data visualization and online shopping assistants. For our team, with Toqan and the underlying tooling we have put together, our aim is to stay ahead of the curve on agents, as we selectively graduate our research on GenAI and agents into future versions of the Toqan Agent and into the products of the Prosus portfolio companies.

Addendum - Market Landscape

In addition to the survey of AgentOps tools, we have also developed a market landscape for existing agents to illustrate the emerging ecosystem. We think that together they can provide a useful guide for others interested in following agents. If you think there are other companies and tools we should add to either landscape, please contact us at paul@toqan.ai, tania.hoeding@prosus.com, or sandeep.bakshi@prosus.com.